Can AI Finally Deliver on Precision Medicine’s Promise?

Yes, But Only If We Nail Both the Biology and Decision Support

Of all the healthcare revolutions that have been “just around the corner” for the past 30 years (see also: “value-based care,” “bending the cost curve,” and “primary care nirvana”), precision medicine is arguably the most exciting and vexing.

Ever since the launch of the Human Genome Project in 1990, pundits have predicted a revolution in personalized care – a world in which every person receives treatments or prevention recommendations tailored to their own individual characteristics, ranging from genetic makeup to environmental exposures.

However, the complexity of human biology, coupled with limited computerized data and immature digital tools, has led precision medicine to remain more aspiration than clinical reality for nearly two generations. The lone exception is cancer, where modern therapeutic approaches are determined, at least in part, by genotype testing and other sophisticated tests of both the tumor and blood.

But virtually every other field of medicine remains remarkably imprecise. In fact, I can think of only one example of precision medicine that comes up regularly in my own practice as a hospitalist: A blood thinner (clopidogrel, brand name Plavix) sometimes used after cardiac stenting works poorly, or not at all, in patients with certain variants of the gene CYP2C19. That’s about it.

AI is bringing new hope to the field, thanks to three converging forces. The first is the increasing availability of large datasets – including data from billing records, clinical data in the EHR, data from sensors and wearables, genetic data, and data on social determinants of health. The second is the advent of modern AI tools capable of extracting this data and deriving insights from it. The third, and one without which the first two won’t matter: more sophisticated, user-friendly clinical decision support systems powered by AI.

An Example: Customized Recommendations for Managing Hypertension

Consider our management of high blood pressure, one of the most common problems in clinical medicine. Today’s published guidelines outline the systolic and diastolic blood pressures above which treatment is recommended, the initial approach (generally diet and exercise), and the medications to prescribe if drugs are needed. In the case of hypertension, four different classes of medications are deemed acceptable, and choosing among them for a given patient is a coin flip.

Our historical approach would have been to study, say, one thousand patients with high blood pressure, treating half with Drug A and half with Drug B. The investigators would then follow the two groups for five years, assessing outcomes like heart attacks and strokes. In the end, if Drug A worked better than B in the whole group, a recommendation to use Drug A for every eligible adult would be chiseled into clinical guidelines by a venerable medical society.

But what if, instead of one thousand patients, we could analyze the records of 10 million? The result might be guidelines that treat two patients with equivalent blood pressures in very different ways. Therapy would be customized not based simply on the diagnosis of hypertension, but on detailed measures of the patient’s genetic makeup, biomarkers, exposures, and the presence of other risk factors for heart disease.

To do this, we would leverage AI’s capabilities to analyze vast numbers of patient records simultaneously. Machine learning algorithms can detect subtle correlations between treatment outcomes (not just blood pressure control but stroke and heart attack risk over time) and numerous variables – from EKG findings to genetic markers to environmental factors – that would be impossible to spot in traditional clinical trials. This approach might reveal that Drug A works exceptionally well in patients with one gene pattern but is ineffective in those with a different makeup. Other patients might have Drug B, C, or D recommended. A few might be told that treatment is unnecessary because their particular risk of heart disease remains low without it.
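To make the idea concrete, here is a toy sketch in Python of how a pooled comparison can hide a subgroup effect. Everything in it is an assumption made for illustration: the cohort is synthetic, the single "gene variant" flag and the event risks are invented, and the drugs are assigned at random (real-world records would also require adjusting for confounding). It is not a description of any actual study or algorithm.

```python
import random
from collections import defaultdict

def simulate_cohort(n, seed=0):
    """Build synthetic patients as (has_variant, drug, had_event) tuples."""
    rng = random.Random(seed)
    # Invented 5-year event risks: Drug A helps variant carriers,
    # Drug B helps everyone else. These numbers are purely hypothetical.
    risk = {
        (True, "A"): 0.05, (True, "B"): 0.15,
        (False, "A"): 0.15, (False, "B"): 0.05,
    }
    cohort = []
    for _ in range(n):
        has_variant = rng.random() < 0.5
        drug = rng.choice(["A", "B"])  # random assignment, as in a trial
        had_event = rng.random() < risk[(has_variant, drug)]
        cohort.append((has_variant, drug, had_event))
    return cohort

def event_rates(cohort, by_variant):
    """Event rate per drug, optionally split by variant status."""
    counts = defaultdict(lambda: [0, 0])  # key -> [events, patients]
    for has_variant, drug, had_event in cohort:
        key = (has_variant, drug) if by_variant else drug
        counts[key][0] += had_event
        counts[key][1] += 1
    return {key: events / total for key, (events, total) in counts.items()}

cohort = simulate_cohort(20000)
overall = event_rates(cohort, by_variant=False)    # whole-group comparison
stratified = event_rates(cohort, by_variant=True)  # split by gene variant

for key in sorted(stratified):
    print(key, round(stratified[key], 3))
print("pooled:", {drug: round(rate, 3) for drug, rate in overall.items()})
```

In this made-up cohort the pooled event rates for the two drugs come out nearly identical (the coin flip), while the stratified rates differ sharply by variant status. Real precision medicine would involve many interacting variables rather than one flag, which is where machine learning earns its keep.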

Note that this scenario doesn’t depend on the availability of new-fangled generative AI. Instead, it’s based on the type of predictive AI/machine learning we’ve been deploying for a few decades, the kind that takes one big bag of data and correlates it with another. (A classic example: our ability to determine cardiac ejection fraction from an EKG, drawn from correlating the results of millions of EKGs with similar numbers of echocardiogram results.)

So, you might ask, if this advance didn’t require the invention of ChatGPT and its brethren, why haven’t we been able to sort this out until recently? It turns out that generative AI has helped by allowing us to “understand” unstructured data (such as the information in a physician’s note). This ability has expanded the breadth and nuance of the clinical data that can be fed into machine learning algorithms.

Another crucial factor was the expanding size of the relevant datasets. For example, through a tool called Cosmos, Epic now makes available deidentified medical records on 300 million patients and their 17.5 billion clinical encounters, allowing researchers to search for associations between predictors and outcomes that would not have been apparent in smaller cohorts. Of course, it’s important to apply hard-nosed epidemiological rigor and prospective validation to such exploratory analyses, since brute force analyses of massive datasets can spit out associations that are coincidental or otherwise spurious.
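The risk of spurious associations is easy to demonstrate with a small simulation. The Python sketch below is an illustration under stated assumptions, not a recipe: the patients, the outcome rate, and the 300 "predictors" are all synthetic noise, and the two-proportion z-test with a Bonferroni correction is just one simple, standard way to account for multiple comparisons.

```python
import math
import random

def two_proportion_p_value(events_1, n_1, events_0, n_0):
    """Two-sided p-value from a pooled two-proportion z-test."""
    if n_1 == 0 or n_0 == 0:
        return 1.0
    pooled = (events_1 + events_0) / (n_1 + n_0)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_1 + 1 / n_0))
    if se == 0:
        return 1.0
    z = (events_1 / n_1 - events_0 / n_0) / se
    return math.erfc(abs(z) / math.sqrt(2))

def count_false_positives(n_patients=2000, n_predictors=300, alpha=0.05, seed=1):
    """Test pure-noise predictors against a random outcome and count 'hits'."""
    rng = random.Random(seed)
    outcomes = [rng.random() < 0.1 for _ in range(n_patients)]
    total_events = sum(outcomes)
    nominal_hits = corrected_hits = 0
    for _ in range(n_predictors):
        # Each predictor is a coin flip with no true link to the outcome.
        exposed = [rng.random() < 0.5 for _ in range(n_patients)]
        n_1 = sum(exposed)
        events_1 = sum(o for o, e in zip(outcomes, exposed) if e)
        p = two_proportion_p_value(
            events_1, n_1, total_events - events_1, n_patients - n_1
        )
        nominal_hits += p < alpha                   # uncorrected threshold
        corrected_hits += p < alpha / n_predictors  # Bonferroni threshold
    return nominal_hits, corrected_hits

nominal_hits, corrected_hits = count_false_positives()
print("significant at p < 0.05:", nominal_hits)
print("significant after Bonferroni:", corrected_hits)
```

Because none of the predictors is truly related to the outcome, every "significant" result here is a false positive. At the 0.05 threshold we would expect roughly 5 percent of the 300 tests to clear the bar by chance alone, which is why large exploratory analyses need correction and, ideally, prospective validation.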

Precision Prediction vs. Precision Medicine

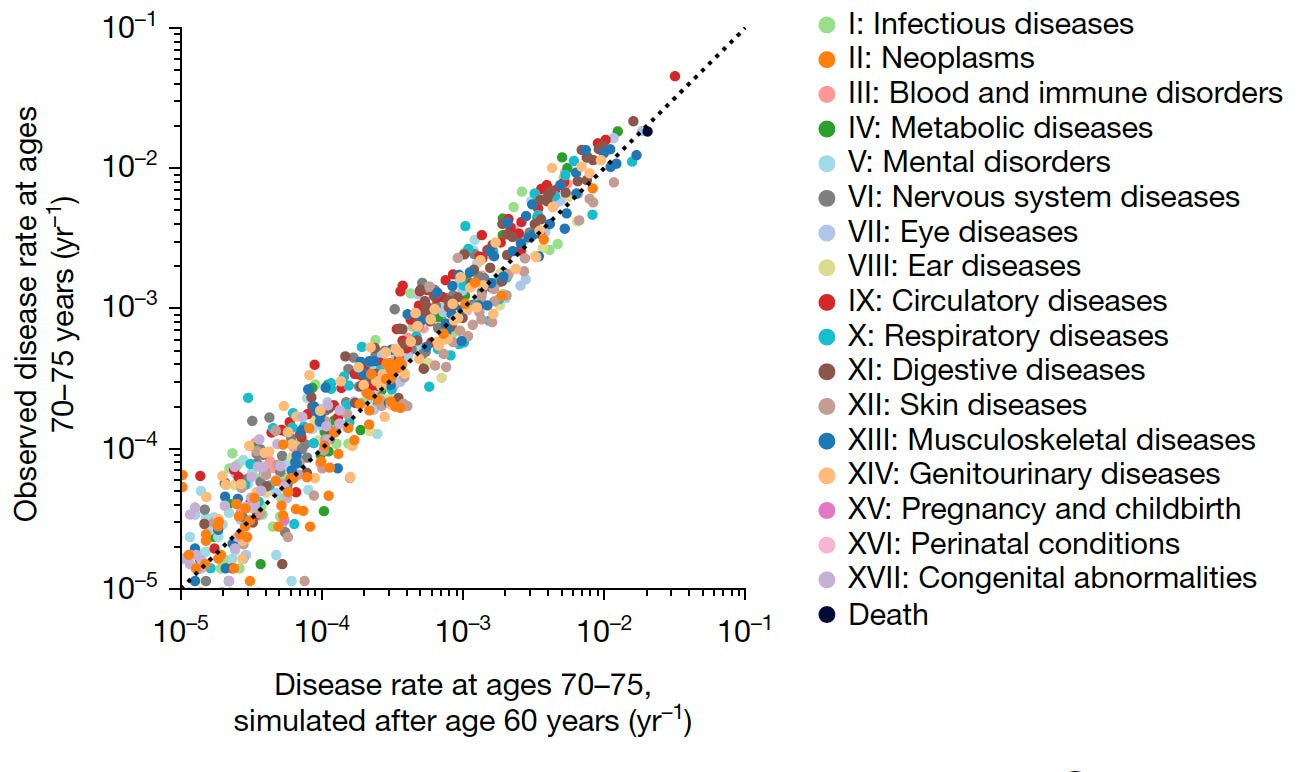

Over the past few years, progress has occurred more rapidly in what I consider precision prediction than in precision medicine. In other words, we’re starting to see reports of AI making startlingly accurate predictions of outcomes in various populations. Just in the past several months, one report in JAMA Network Open found that a series of sequential mammograms could accurately predict breast cancer risks, even when none of the mammograms showed a cancer. Another study, this one published in Nature, found that AI could predict, with reasonable accuracy, which patients would develop any of 1,000 different diseases, and when.

While results like these represent epidemiologic tours de force (see a lovely analysis of the latter study by Eric Topol), I remain a bit skeptical of the ultimate impact of AI-based prediction models. It’s not that I don’t think the results are accurate (and they’ll no doubt continue to improve), but I wonder whether these kinds of results will be transformative in the real world of healthcare. Zeke Emanuel and I wrote a paper in JAMA several years ago in which we pushed back on the notion that all healthcare needed was more accurate AI-enabled predictions. Drawing on an analogy from immunology, we wrote:

The body needs to identify foreign substances and organisms. But the crucial step is the activation of the immune system’s effector arm – the antibody- and cell-mediated mechanisms, the complex array of cells, cytokines, complement, and more – that attack, neutralize, kill, and eliminate the intruders. Data, analytics, AI, and machine learning are about identification. But they have little role in establishing the structures, culture, and incentives necessary to change the behaviors of clinicians and patients.

Could AI-based predictions make a meaningful difference? Sure, if we treat them as only part of the problem we’re trying to solve. Such predictions will be necessary – but not sufficient – for creating bespoke screening and treatment strategies. For example, knowing that one person’s clinical and genetic profile puts them at higher risk of a heart attack in the next 10 years might trigger more careful screening or customized treatment recommendations for primary prevention. Similarly, a patient whose mammograms predict a higher risk of breast cancer is likely to benefit from more frequent screening studies and, potentially, preventive treatments.

AI may also help inform – and then supply – behavioral nudges. Such nudges may be guided by determining what has worked previously with you, or in patients like you. Given the billions of dollars that companies like Meta spend on behavioral nudges designed to encourage people to stay engaged with Facebook or to purchase certain products, it seems reasonable to believe we can develop AI-based strategies to nudge people toward healthy behaviors or effective prevention strategies. Of course, these strategies can be used to manipulate patients; we’ll need strong guardrails and robust informed consent.

Why None of This Will Matter if We Don’t Nail Decision Support

As you’ve seen, we need modern AI to disentangle the biology and epidemiology of precision medicine. But we also need its help in turning all this into a workable system for both doctors and patients. Here’s why:

Research has shown that the human brain can hold only about seven discrete pieces of information in working memory at once. This cognitive straitjacket wasn’t a problem when treating high blood pressure meant choosing among a few standard medications, perhaps with one or two variations on the theme (the choice might vary when treating hypertension in a patient with early kidney disease or diabetes, for example).

However, in a world in which treatment decisions might depend on the interactions of dozens of genetic markers, protein levels, environmental factors, and comorbidities, even the smartest and most conscientious physician won’t be able to process all the relevant variables without the help of a digital wingman. The same principle applies to screening guidelines, which today tend to be one-size-fits-all (such as starting colonoscopies at age 45), but in the future are likely to be customized. This will introduce a level of complexity that no individual clinician can possibly master. At that point, we’ll be utterly dependent on AI-assisted computerized decision support to guide us to the right tests and treatments for each patient. And, to the extent that our recommendations for patient behavior (diet, exercise, medications, and other preventive strategies) are customized, they too will require an assist from AI.

While this is all very exciting, the risk of overhype is real. My guess is that it will be at least five – and possibly ten – years before we have accurate and reliable individualized testing and treatment recommendations across a wide range of conditions, and then integrate these into practical, usable AI-enabled decision-support systems. That said, I believe that modern AI – applied to today’s massive and diverse datasets – will finally allow this vision to move from “around the corner” into mainstream medical practice.